What We Take for Granted

Our World Through AI's Eyes

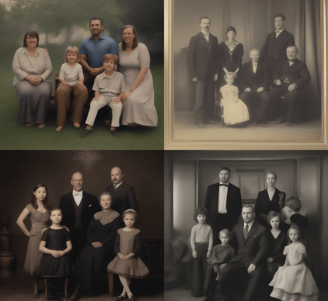

Explore how AI interprets the world through biased training data in a curated image gallery.

What Is AI Model Bias?

Generative AI models like Stable Diffusion and Flux are revolutionizing digital media, yet their visual outputs embed and amplify societal biases. This project examines how popular open-source text-to-image models depict a variety of subjects, including people, objects, events, and locations, revealing the implicit stereotypes and biases encoded in the data used to train them.

So how do biases in AI models present themselves?

Don't worry—I have a list.

Bias is flagging women in tank tops as "adult" content while shirtless men are not flagged, revealing how nudity filters disproportionately sexualize women’s bodies even in neutral contexts (Birhane et al., 2021).

Bias is CLIP misclassifying common household objects in non-Western or lower-income settings, such as failing to recognize a refrigerator in an African kitchen while correctly tagging one in a Western kitchen (Nwatu et al., 2023).

Bias is "doing household chores" returning women, while "handling finances" or "repairing a car" returns men, reinforcing traditional gender roles through occupational imagery in prompt completions (Girrbach et al., 2025).

Bias is East Asian women being depicted with Westernized features, dressed in kimonos, and shown in passive or submissive poses, regardless of the cultural or occupational context of the prompt (Lan et al., 2025).

Bias is anime-style image generation models feminizing male character prompts, a result of training sets like Danbooru that overrepresent female characters, skewing gender expression even when prompts specify otherwise (Park et al., 2022).

Bias is LGBTQ+ content silently filtered out during dataset curation—as seen in CLIP-based pipelines, where queer-related tags were often excluded under “sensitive content” filters, reducing representation in training corpora (Hong et al., 2024).

Bias is Black and Latino men being labeled as "criminal" at higher rates than other races as training dataset sizes increase—indicating that scale does not reduce bias, and instead amplifies existing stereotypes (Solaiman, 2023).

Bias is models like Stable Diffusion absorbing hate speech, slurs, and sexual abuse content from the LAION-400M dataset and then surfacing toxic associations even in images generated from neutral prompts (Birhane et al., 2021).

Bias is generative AI models producing images of men with lighter skin tones and women with darker skin tones when prompted with terms like "CEO" and "nurse," respectively (Nicoletti & Bass, 2023).

You get the picture.

But Why Are AI Models Biased?

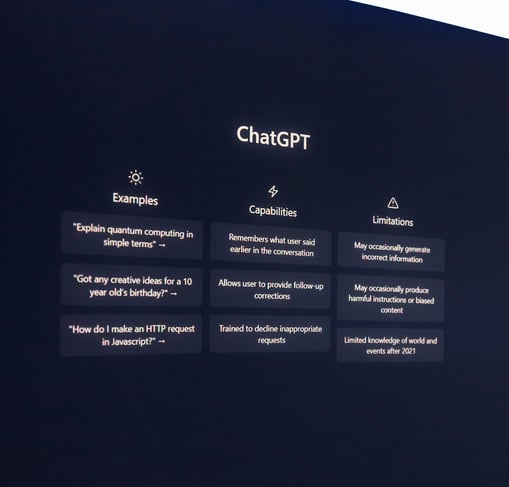

All generative AI models are biased, but not all bias is harmful. Some biases are both useful and necessary. For example, the fact that large language models like Claude, Gemini, and ChatGPT respond in natural language rather than unintelligible gibberish reflects a helpful bias in their training data, one that makes these tools useful. Harmful biases, however, are far more insidious: they are harder both to recognize and to mitigate adequately.

Bias in AI image generation is a multifaceted problem, rooted deeply in dataset composition, tagging practices, annotation labor disparities, and model architecture. Open image datasets such as LAION contain large amounts of inadequately filtered content that amplifies explicit biases and stereotypical representations (Leu et al., 2024). Classifier models further propagate bias through automated tagging decisions, frequently defaulting to problematic portrayals such as hyper-sexualization and racial stereotyping (Park et al., 2022).

These biases are often magnified rather than diminished through successive generations of models (Solaiman et al., 2023). As generative AI becomes increasingly mainstream, these models' outputs may profoundly shape digital representation in educational, archival, and everyday contexts.

How Do We Assess Bias in AI Models?

Well, you have to look at a lot of pictures and analyze a lot of text.

This project uses three significant open-source generative models, Stable Diffusion 1.5 (SD1.5), Stable Diffusion XL (SDXL), and Flux.1 (Flux), to generate an image dataset for each selected prompt category. These categories include a broad range of commonly photographed and imaged subjects, such as people, events, meals, activities, and locations. The prompts are broad and generalized (e.g., "a person," "breakfast") to give a clearer view of how these models handle basic concepts.
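To make the scale of this design concrete, here is a minimal sketch of how the generation runs might be enumerated. It assumes 512 images per prompt for each model, with one fixed seed per image (seeds 0 through 511). The model labels, the two-prompt list, and the `GenerationJob` / `build_jobs` names are hypothetical placeholders. The project's actual prompt list and generation settings are not specified here.

```python
from dataclasses import dataclass
from itertools import product

# Hypothetical labels for the three models named in the text (not official model IDs).
MODELS = ["SD1.5", "SDXL", "Flux.1"]
# Example prompts taken from the text; the full category list is not reproduced here.
PROMPTS = ["a person", "breakfast"]
IMAGES_PER_PROMPT = 512


@dataclass(frozen=True)
class GenerationJob:
    """One image to generate: which model, which prompt, and which seed (hypothetical)."""
    model: str
    prompt: str
    seed: int


def build_jobs(models: list[str], prompts: list[str], n: int) -> list[GenerationJob]:
    """Enumerate every (model, prompt, seed) combination, assuming seeds 0..n-1."""
    return [GenerationJob(m, p, s) for m, p, s in product(models, prompts, range(n))]


jobs = build_jobs(MODELS, PROMPTS, IMAGES_PER_PROMPT)
print(len(jobs))  # 3 models x 2 prompts x 512 seeds = 3072
```

With the two example prompts, this yields 3 × 2 × 512 = 3,072 images, and each additional prompt adds another 1,536. Fixing seeds is one way to make a run reproducible. It is an assumption in this sketch, not a documented part of the project.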

Each prompt is used to generate 512 images (an arbitrary homage to SD1.5's native 512 × 512 resolution; Rombach et al., 2022), which are then composited to produce an "average" visual representation. Individual images are also assembled into detailed mosaics that show the variation among them, variation that statistically disappears when the images are viewed in aggregate. Automated tagging tools analyze these images to surface underlying metadata biases, while statistical analyses identify systematic patterns and discrepancies. In some cases, the "average" or "median" image may instead be produced by identifying the most common labels for each subject and using them to compose a representative prompt.
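The compositing and label-frequency steps can be sketched in plain Python. This is a minimal illustration, not the project's pipeline. It assumes images are already decoded into equally sized grids of RGB tuples, and that a tagging tool (not named in the text) has already produced a list of labels for each image. `composite` combines images pixel by pixel using either the mean or the median. The hypothetical `representative_prompt` helper joins a subject with its most frequent labels.

```python
from collections import Counter
from statistics import mean, median
from typing import Callable, Sequence

Pixel = tuple[int, int, int]  # (R, G, B), each 0-255
Image = list[list[Pixel]]     # rows of pixels; every image must share one size


def composite(images: Sequence[Image], reducer: Callable[[Sequence[int]], float]) -> Image:
    """Reduce each pixel position across all images, one colour channel at a time."""
    height, width = len(images[0]), len(images[0][0])
    result: Image = []
    for y in range(height):
        row: list[Pixel] = []
        for x in range(width):
            # zip(*...) regroups the images' (R, G, B) values into per-channel sequences.
            channels = zip(*(img[y][x] for img in images))
            row.append(tuple(round(reducer(values)) for values in channels))
        result.append(row)
    return result


def representative_prompt(tag_lists: Sequence[Sequence[str]], subject: str, top_k: int = 3) -> str:
    """Hypothetical helper: prefix `subject` with the top_k labels found in the most images."""
    # dict.fromkeys drops duplicate tags within one image while keeping their order,
    # so each label is counted at most once per image and ties break by first appearance.
    counts = Counter(tag for tags in tag_lists for tag in dict.fromkeys(tags))
    return ", ".join([subject, *(tag for tag, _ in counts.most_common(top_k))])


# Three tiny 1x2 "images" standing in for the 512 generated ones.
images = [
    [[(0, 0, 0), (255, 255, 255)]],
    [[(100, 50, 0), (255, 255, 255)]],
    [[(200, 100, 0), (0, 0, 0)]],
]
print(composite(images, mean))    # [[(100, 50, 0), (170, 170, 170)]]
print(composite(images, median))  # [[(100, 50, 0), (255, 255, 255)]]

tags = [["woman", "smiling", "outdoors"], ["man", "smiling"], ["woman", "smiling", "indoors"]]
print(representative_prompt(tags, "a person", top_k=2))  # a person, smiling, woman
```

The second pixel shows how the two reducers differ. The mean is pulled toward the single dark outlier, while the median ignores it. At full scale, the same loops would run over 512 images at each model's output resolution, where an array library would be much faster. Plain lists simply keep the sketch dependency-free.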

© 2025 E'Narda McCalister. All rights reserved.